As I have already mentioned, riak_core is a great framework to use if you like Erlang and have a problem that can be solved with a masterless distributed ring of hosts.

There are several introductory articles about riak_core, but I think the initial introduction by Andy Gross, the Try Try Try blog by Ryan Zezeski and the Riak Core wiki still remain the most useful resources for learning about it.

So why write this post, you might wonder? Because even after studying every single bit of information about riak_core multiple times, I still had tons of questions about how the ring actually works. It might well be evidence of my stupidity, but it also says something about the quality of Basho’s documentation, which … could be better (in contrast with their Erlang code, which is beautiful). For me the best way to figure something out is to write it down, so what follows is more for me than for you. Sorry.

Each sector of the ring represents a *primary* partition. This is important: in a stable ring there are no *fallback* partitions. Typically, when you save data into the ring you use a replication factor (usually N=3), so riak will pick a sector on the ring and then walk down the ring and pick up the next two sequential partitions. The resulting list of partitions is what is called the Preflist. As you can see from the picture, these partitions live on different physical nodes, so your data is replicated across multiple physical nodes. All of this is true only if you followed Basho’s recommendations and set up your ring correctly.
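To make the walk around the ring concrete, here is a minimal sketch in plain Erlang that does not depend on riak_core. The module name `preflist_sketch` and its functions are hypothetical. It assumes the ring is the 160-bit SHA-1 space cut into equal sectors, that a key is hashed with SHA-1 over `term_to_binary/1`, and that the first partition of the Preflist is the one that follows the hash position. That is how I understand riak_core to behave, but treat it as a model rather than the library's code.

```erlang
-module(preflist_sketch).
-export([partitions/1, preflist/3, preflist_from_hash/3]).

%% The ring covers the SHA-1 hash space: 0 .. 2^160 - 1.
-define(RING_TOP, (1 bsl 160)).

%% Indices of all partitions for a ring cut into RingSize equal sectors.
partitions(RingSize) ->
    Inc = ?RING_TOP div RingSize,
    [I * Inc || I <- lists:seq(0, RingSize - 1)].

%% Hash the key onto the ring, then build the preflist from that position.
%% Hashing with SHA-1 over term_to_binary/1 is an assumption of this sketch.
preflist(Key, N, RingSize) ->
    <<Hash:160/integer>> = crypto:hash(sha, term_to_binary(Key)),
    preflist_from_hash(Hash, N, RingSize).

%% Start at the partition that follows the hash position and take N
%% consecutive partitions, wrapping around the end of the ring.
preflist_from_hash(Hash, N, RingSize) ->
    Inc = ?RING_TOP div RingSize,
    Start = (Hash div Inc) + 1,
    [((Start + I) rem RingSize) * Inc || I <- lists:seq(0, N - 1)].
```

For a ring of 16 partitions the sector size is 2^156, and `preflist_sketch:preflist_from_hash(7 * (1 bsl 156), 3, 16)` returns the three indices starting at 2^159 (730750818665451459101842416358141509827966271488), which are the same partition numbers that show up in the shell sessions further down.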

So, question number one: what happens at startup of each physical node in the ring? I mean, you built the ring and committed the changes, so each physical node in the ring knows exactly which partitions belong to it, right?

In reality, each physical node always starts up *all* the vnodes configured for the ring. If the ring was set up with 64 partitions, each node will create 64 vnodes on startup. It is easy to check: set the lager logging level to “debug” and restart a node in the ring, and you will see that it starts all the partitions.

```
21:47:27.551 [debug] Will start VNode for partition 0
21:47:27.561 [debug] Will start VNode for partition 548063113999088594326381812268606132370974703616
21:47:27.562 [debug] Will start VNode for partition 91343852333181432387730302044767688728495783936
```
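For reference, this is roughly how the debug level can be switched on. The fragment below is a sketch of the lager section of a release config file, and the surrounding layout is an assumption about your project. The `{lager_console_backend, debug}` form is the one older lager releases accept; newer releases expect `{lager_console_backend, [{level, debug}]}` instead, so check the version you depend on.

```erlang
%% Fragment of sys.config / app.config (layout assumed, adapt to your release).
[
 {lager, [
   {handlers, [
     %% Console handler at debug level, older lager syntax.
     {lager_console_backend, debug}
   ]}
 ]}
].
```

On a node that is already running, `lager:set_loglevel(lager_console_backend, debug).` in the shell changes the level without a restart, although for this experiment you need the restart anyway to see the vnodes being started.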

After that, the newly started node exchanges metadata with the rest of the ring and starts an ownership handoff. When this is done, the node will have vnodes only for the primary partitions that it owns; the other vnode processes will be shut down.

Basically, this means that you need to be careful if you want to have some logic in the init function of your vnode that needs to execute only once, for the primary partition, on startup.
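One possible guard, sketched under assumptions: compare the partition the vnode was started for against the ownership list of the ring and run the one-off logic only when the local node is the owner. The module `primary_guard_sketch` and both of its functions are hypothetical helpers. In a real vnode the `Owners` list could come from `riak_core_ring:all_owners/1` applied to the ring returned by `riak_core_ring_manager:get_my_ring/0`, and whether the ring is already settled at the moment `init/1` runs is something you would need to verify for your own application.

```erlang
-module(primary_guard_sketch).
-export([is_primary/3, run_once_if_primary/4]).

%% Owners is a list of {PartitionIndex, Node} pairs, the same shape that
%% riak_core_ring:all_owners/1 returns.
is_primary(Partition, Node, Owners) ->
    lists:keyfind(Partition, 1, Owners) =:= {Partition, Node}.

%% Run Fun only when Node is the primary owner of Partition.
%% Returns {ran, Result} or skipped.
run_once_if_primary(Partition, Node, Owners, Fun) when is_function(Fun, 0) ->
    case is_primary(Partition, Node, Owners) of
        true  -> {ran, Fun()};
        false -> skipped
    end.
```

Inside a vnode's `init([Partition])` this would be called with `node()` as the second argument, so a vnode started for a partition that the node does not own simply skips the one-off work.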

The next question – where do the *fallback* partitions come from?

You have your ring up and ready, then something goes wrong and one of your physical nodes dies. The docs say your data will go to the *fallback* node, but where is it and what is it?

When I initially read the docs, I got the incorrect impression that one of the other *primary* partitions would step in and accept the data until the failed node with the primary partition recovered. This is wrong.

Let's see what really happens.

I am running a 3-node cluster and asking for the 3 primary partitions to ping:

```
(node1@iMac.home)8> riak_core_apl:get_apl(riak_core_util:chash_key({<<"ping">>, <<"test1">>}), 3, magma).
[{730750818665451459101842416358141509827966271488,
  'node1@iMac.home'},
 {822094670998632891489572718402909198556462055424,
  'node2@iMac.home'},
 {913438523331814323877303020447676887284957839360,
  'node3@iMac.home'}]
```

Now let's kill node3, which holds partition 913438523331814323877303020447676887284957839360, and run the same command on the same node:

```
(node1@iMac.home)10> riak_core_apl:get_apl(riak_core_util:chash_key({<<"ping">>, <<"test1">>}), 3, magma).
[{730750818665451459101842416358141509827966271488,
  'node1@iMac.home'},
 {822094670998632891489572718402909198556462055424,
  'node2@iMac.home'},
 {913438523331814323877303020447676887284957839360,
  'node1@iMac.home'}]
```

OK, now we get a very similar list, but the ring says that partition 913438523331814323877303020447676887284957839360 now lives on node1 instead of node3.
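The substitution seen above can be modelled in a few lines of plain Erlang. This is a sketch based on my reading of how the active preflist is built, not riak_core's actual code: primaries on reachable nodes are kept, and each primary on a dead node is replaced by an entry with the same partition index on the next node further along the ring that is still up. The module name `apl_sketch` is hypothetical, and so is the owner of the fourth partition in `demo/0`, which the shell output does not show.

```erlang
-module(apl_sketch).
-export([active_preflist/3, demo/0]).

%% Preflist: {Index, OwnerNode} pairs ordered from the first primary onwards.
%% N: number of replicas. UpNodes: nodes that are currently reachable.
active_preflist(Preflist, N, UpNodes) ->
    {Primaries, Rest} = lists:split(N, Preflist),
    {Up, Down} = lists:partition(
                   fun({_Idx, Node}) -> lists:member(Node, UpNodes) end,
                   Primaries),
    Up ++ fallbacks(Down, Rest, UpNodes).

%% For each down primary, walk further along the ring to the next partition
%% whose owner is up. The fallback keeps the primary's index but is placed
%% on that owner's node.
fallbacks([], _Rest, _UpNodes) ->
    [];
fallbacks(_Down, [], _UpNodes) ->
    [];  % no candidates left on the ring
fallbacks([{Idx, _Dead} | Down] = AllDown, [{_, Candidate} | Rest], UpNodes) ->
    case lists:member(Candidate, UpNodes) of
        true  -> [{Idx, Candidate} | fallbacks(Down, Rest, UpNodes)];
        false -> fallbacks(AllDown, Rest, UpNodes)
    end.

%% The three primaries from the shell session, followed by the next
%% partition on the ring (its owner, node1, is an assumption).
demo() ->
    Preflist = [{730750818665451459101842416358141509827966271488, 'node1@iMac.home'},
                {822094670998632891489572718402909198556462055424, 'node2@iMac.home'},
                {913438523331814323877303020447676887284957839360, 'node3@iMac.home'},
                {1004782375664995756265033322492444576013453623296, 'node1@iMac.home'}],
    active_preflist(Preflist, 3, ['node1@iMac.home', 'node2@iMac.home']).
```

With node3 missing from the list of up nodes, `apl_sketch:demo()` returns the two surviving primaries followed by `{913438523331814323877303020447676887284957839360, 'node1@iMac.home'}`, the same shape as the second shell result.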

Really? Let's check it. Observer on node1 still shows that the node has only the 6 original vnodes it had before.

So let's run a ping against {913438523331814323877303020447676887284957839360, 'node1@iMac.home'}:

(node1@iMac.home)12> riak_core_vnode_master:sync_spawn_command({913438523331814323877303020447676887284957839360, 'node1@iMac.home'}, ping, magma_vnode_master).

00:03:34.079 [debug] Will start VNode for partition 913438523331814323877303020447676887284957839360

00:03:34.080 [debug] vnode :: magma_vnode/913438523331814323877303020447676887284957839360 :: undefined

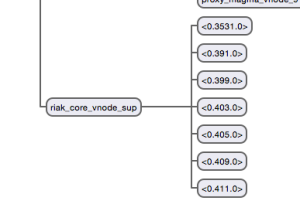

00:03:34.080 [debug] Started VNode, waiting for initialization to complete <0.3531.0>, 913438523331814323877303020447676887284957839360

00:03:34.080 [debug] VNode initialization ready <0.3531.0>, 913438523331814323877303020447676887284957839360

{pong,913438523331814323877303020447676887284957839360}

This is interesting: riak *has started* a new vnode process for us, and this vnode replied to the ping.

And sure enough, we can now find this process in the observer.

So this is the *fallback* vnode for the failed primary partition. It has the same partition number but lives on a different physical node, and it got created auto-magically on request.

Let's see what happens now when we bring node3 back to life.

It takes a few moments for the new node3 to join the cluster and for the ring to gossip its metadata, but when that is done the ring realises that we now have two vnodes for the same partition. This realisation triggers a *hinted* handoff, and the data (if there is any) is moved from node1 to node3, which hosts the primary partition again. After that, the *fallback* partition on node1 gets deleted.

```
0:12:13.110 [debug] completed metadata exchange with 'node3@iMac.home'. nothing repaired
00:12:15.344 [debug] 913438523331814323877303020447676887284957839360 magma_vnode vnode finished handoff and deleted.
00:12:15.344 [debug] vnode hn/fwd :: magma_vnode/913438523331814323877303020447676887284957839360 :: undefined -> 'node3@iMac.home'
00:12:15.345 [debug] 913438523331814323877303020447676887284957839360 magma_vnode vnode excluded and unregistered.
00:12:23.113 [debug] started riak_core_metadata_manager exchange with 'node2@iMac.home' (<0.5662.0>)
```

This is pretty cool, and it now makes perfect sense.

Hopefully this little post will help somebody learn a little bit more about riak_core.

!!! UPDATE: shortly after I published this post, Valery Meleshkin (@sum3rman) pinged me and sent some awesome slides from an internal talk at his company about “Riak_Core Concepts and Misconceptions”. With his kind permission I am adding these slides here: riak_core
